The Problem and the Solution

LLMs Are Not Enough. Agents Are the Answer. But Agents Are Hard.

I. What Changed in 2026

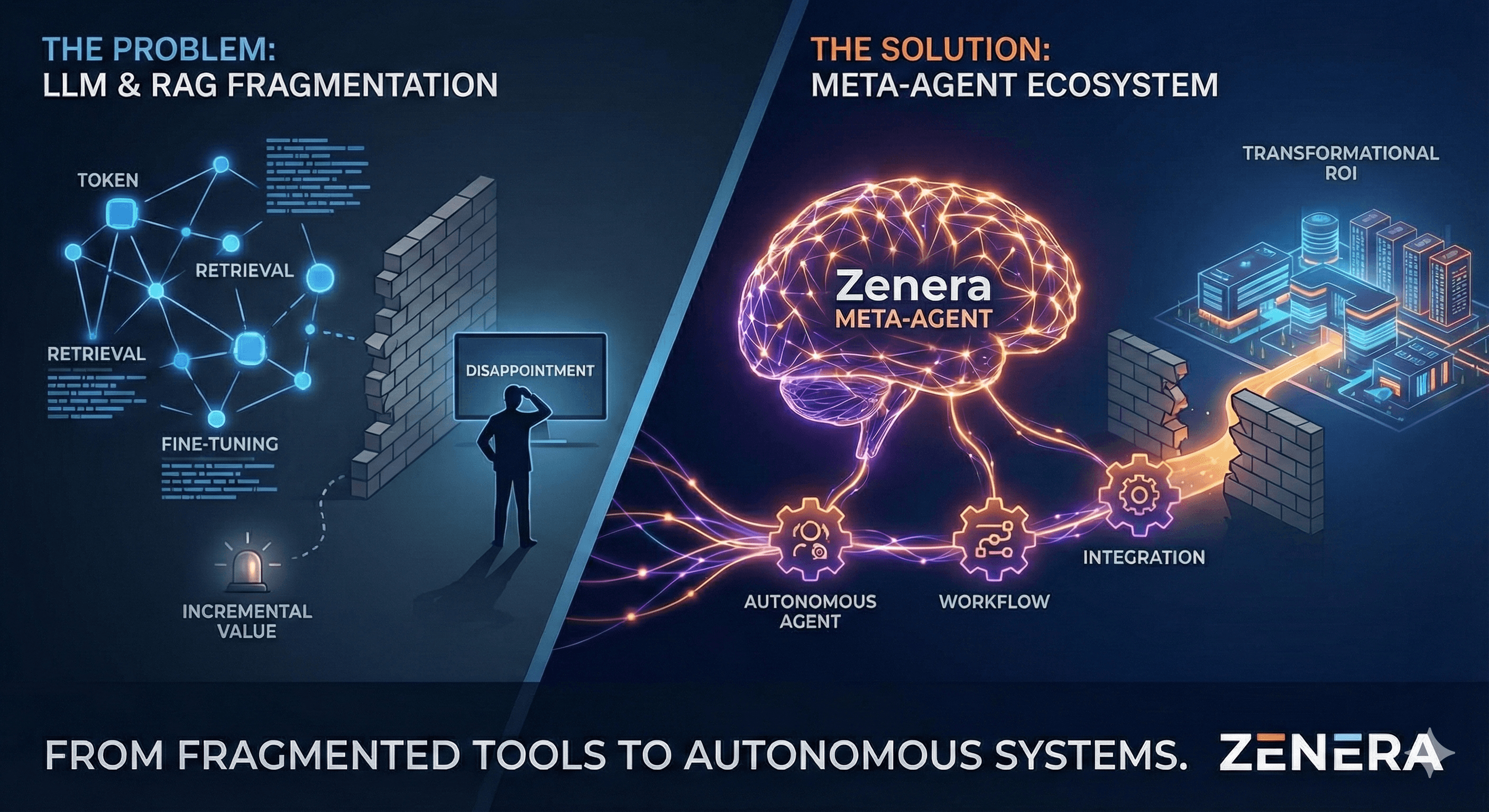

The First Wave Failed

The enterprise AI investment cycle of 2023–2025 followed a predictable arc. Organizations procured GPU clusters, licensed foundation models, and deployed teams of engineers to build retrieval pipelines. The dominant pattern, LLM + RAG + fine-tuning, became the de facto enterprise AI stack.

It delivered disappointment at scale.

RAG is a retrieval improvement, not an intelligence upgrade. It reduces hallucinations on document Q&A and extends context reach, but it does not change what the model fundamentally does. It answers a question and stops. It does not navigate a system, execute a workflow, or act on behalf of the business. The model remains passive. The human remains in the loop for everything that matters.

Fine-tuning compounds the illusion. It shapes model behavior on a static snapshot of tasks, requiring expensive data curation, ML expertise, and continuous retraining as business logic evolves. It optimizes for yesterday. Enterprise processes move faster than fine-tuning cycles.

The honest accounting: most enterprises spent two years building sophisticated ways to make a language model answer questions slightly better. The ROI was marginal. The capability gap between "impressive demo" and "production-grade business value" remained vast.

The Second Wave Proved the Thesis

2026 changed the conversation, not with a new model release, but with evidence from production.

Agents have already transformed an entire industry. In software development, coding agents like Claude Code, Codex, and Cursor delivered productivity gains that would have been dismissed as fantasy just two years ago. Developers report 2–10x throughput improvements on complex tasks. Multi-file refactors that took days happen in minutes. Entire features are architected, implemented, tested, and shipped by agents operating autonomously across codebases of millions of lines. This is not incremental. It is a category-level shift in how software gets built.

Now the same transformation is crossing into every other vertical. Agentic systems (AI that acts, observes outcomes, adapts, and operates autonomously across complex workflows) are delivering measurable value in environments where RAG-based approaches had stalled. They file regulatory submissions, orchestrate supply chain recovery, synthesize research across thousands of documents and databases, execute multi-step procurement workflows, run compliance audits, and manage end-to-end hiring pipelines without human intervention. What coding agents proved in software, enterprise agents are now proving in finance, healthcare, legal, manufacturing, and operations. Nor are the verticals adopting agents in isolation; they are connecting: supply chain agents trigger procurement agents, compliance agents feed legal agents, and engineering agents coordinate with operations agents. The agentic wave is converging across the enterprise.

The ROI numbers from early adopters are not incremental. They are transformational. The reason is structural: agents replace entire workflows, not just assist with individual tasks. A well-built agentic system doesn't make a knowledge worker 20% more efficient. It eliminates entire categories of manual work.

The technology has crossed a threshold. The question is no longer whether agents can deliver enterprise value. It is whether your organization can build them.

II. The Two Hard Problems

The opportunity is clear. But between recognizing that agents work and deploying agents that work reliably in your enterprise, two hard problems stand in the way.

Problem 1: Building Agents Is Nothing Like Building Software

Building a production-grade agentic system is an entirely different discipline from traditional software engineering, and most organizations discover this too late.

Traditional software has a compiler. It has a type system. A syntax error fails immediately. A logic error is reproducible and debuggable. The tools of the trade (tests, linters, formal specifications) exist precisely to catch errors before they reach production.

Agentic systems have none of this.

There is no compiler that tells you your tool description is ambiguous. There is no linter that detects a semantic contradiction between two agents' system prompts that will cause them to loop indefinitely. There is no type checker that verifies your handoff schema is actually parseable by the receiving agent. There is no test suite that reliably predicts agent behavior across the distribution of real-world inputs, because agents are not deterministic functions. They are probabilistic reasoners operating in open-ended environments.

The failure modes are invisible until they are catastrophic:

- Prompt contradictions - Two agents in the same system receive conflicting instructions. In most situations, nothing breaks. In one edge case, they enter a handoff loop that processes tens of thousands of tokens before a timeout kills the workflow.

- Tool fragility - A single change to a tool definition (a renamed parameter, a modified description) can silently alter how the agent reasons about when and how to call it. The system still runs. It produces subtly wrong results.

- LLM dependency - Swap the underlying model for a newer version with better benchmarks, and agents that operated reliably begin mis-routing, mis-interpreting, or abandoning tasks mid-execution. The agents were tuned for a specific model's behavioral distribution. The new model has a different one.

- Runaway execution - Without explicit trajectory bounds, agents explore. They call tools in combinations that were never intended. They generate sub-tasks that spawn further sub-tasks. Costs compound. Systems degrade.

- Infrastructure mismatch - Agents designed without awareness of the runtime environment (retry semantics, timeout behavior, concurrency limits, storage guarantees) fail in ways that are nearly impossible to reproduce outside production.
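The runaway-execution and handoff-loop failures above are usually contained with hard limits enforced outside the model. The sketch below is a minimal illustration, assuming a hypothetical orchestrator that calls `record_step` after every model turn or tool call and `record_handoff` whenever one agent passes work to another. `TrajectoryGuard`, `TrajectoryBoundExceeded`, and the default limits are illustrative names and values, not part of any specific framework.

```python
from dataclasses import dataclass, field


class TrajectoryBoundExceeded(RuntimeError):
    """Raised when an agent run exceeds one of its configured limits."""


@dataclass
class TrajectoryGuard:
    # Hypothetical guard: the names and default limits are illustrative assumptions.
    max_steps: int = 50
    max_tokens: int = 100_000
    max_repeated_handoffs: int = 3
    steps: int = 0
    tokens: int = 0
    handoff_counts: dict = field(default_factory=dict)

    def record_step(self, tokens_used: int) -> None:
        """Count one model turn or tool call and enforce the step and token budgets."""
        self.steps += 1
        self.tokens += tokens_used
        if self.steps > self.max_steps:
            raise TrajectoryBoundExceeded(f"step budget exceeded ({self.steps} > {self.max_steps})")
        if self.tokens > self.max_tokens:
            raise TrajectoryBoundExceeded(f"token budget exceeded ({self.tokens} > {self.max_tokens})")

    def record_handoff(self, sender: str, receiver: str) -> None:
        """Flag a likely handoff loop when the same sender -> receiver edge repeats too often."""
        edge = (sender, receiver)
        self.handoff_counts[edge] = self.handoff_counts.get(edge, 0) + 1
        if self.handoff_counts[edge] > self.max_repeated_handoffs:
            raise TrajectoryBoundExceeded(f"possible handoff loop: {sender} -> {receiver}")


# Two agents with contradictory instructions keep handing the task back and forth.
guard = TrajectoryGuard(max_repeated_handoffs=2)
try:
    for _ in range(10):
        guard.record_step(tokens_used=500)
        guard.record_handoff("triage", "billing")
        guard.record_handoff("billing", "triage")
except TrajectoryBoundExceeded as exc:
    print(exc)  # possible handoff loop: triage -> billing
```

Counting repeated sender-to-receiver edges is a cheap heuristic: it stops ping-pong loops like the one in the prompt-contradictions example long before a timeout would, but a legitimate workflow that revisits the same edge many times would need a higher limit.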

This is not traditional software development. It is closer to coaching a team of highly capable but unpredictable specialists: defining scope, setting limits, establishing communication protocols, verifying that they understand each other, and monitoring for drift. It requires deep expertise in how large language models reason, not just in how to call their APIs.

And here is the uncomfortable truth: humans will never be good enough at this. Agentic systems are fundamentally fuzzy: sprawling configurations of natural-language prompts, loosely typed tool schemas, probabilistic handoff logic, and emergent multi-agent interactions. No matter how much training an engineer receives, the human brain is not built to hold the full state of a complex multi-agent system in working memory, detect subtle semantic contradictions across dozens of prompts, or predict how a model will interpret a tool description under every possible input distribution. The error surface is too large, too ambiguous, and too context-dependent for manual engineering to master.

The solution is not more training. The solution is the right tool.

Solution: The Zenera Meta-Agent - Vibe-Coding for Multi-Agent Systems

Zenera's Meta-Agent is that tool. LLMs working as agents are exceptionally good at exactly this kind of task: reasoning over large bodies of loosely structured text, detecting inconsistencies, verifying semantic coherence, and generating well-coordinated system configurations. They exceed human capabilities at agent design by orders of magnitude, for the same reason coding agents exceed humans at large-scale refactoring: the task is a natural fit for how these models reason.

With the Zenera Meta-Agent, any person with a modest technical background can build, test, and deploy sophisticated multi-agent systems in minutes: systems comparable to Claude Code, Codex, or OpenClaw for development workflows, or purpose-built vertical agents for legal, hiring, engineering, finance, healthcare, and operations. No deep ML expertise. No months of prompt engineering. No trial-and-error debugging of handoff loops.

Think of it as vibe-coding for multi-agent systems. You describe what you need. The Meta-Agent architects, generates, validates, and deploys a production-grade agentic system: coherent, governed, and ready to run.

When you describe a problem, the Meta-Agent does not return a chatbot. It architects a complete, cohesive multi-agent system: role decomposition, system prompts, tool definitions, handoff protocols, approval workflows, UI components, and integration code. Every element is designed together, not assembled from parts. Semantic consistency across agents is verified before deployment. Handoff schemas are validated. Trajectory bounds are set. Termination conditions are guaranteed.
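Two of the checks listed above, handoff-schema validation and termination, can be approximated statically before anything runs. The sketch below assumes a hypothetical, simplified agent description in which each agent declares the fields it requires and emits as name-to-type maps; `AgentSpec` and `check_handoffs` are illustrative names, not Zenera's API. The reachability test is a necessary condition for termination, not a proof of it.

```python
from dataclasses import dataclass


@dataclass
class AgentSpec:
    # Hypothetical, simplified agent description: field name -> type name.
    name: str
    inputs: dict   # fields the agent requires when it receives a handoff
    outputs: dict  # fields the agent emits when it hands work off
    terminal: bool = False  # True if the agent can end the workflow


def check_handoffs(agents: dict, edges: list) -> list:
    """Return a list of problems found in a handoff graph of (sender, receiver) edges."""
    problems = []
    # Schema check: the sender must emit every field the receiver requires, with the same type.
    for sender_name, receiver_name in edges:
        sender, receiver = agents[sender_name], agents[receiver_name]
        for field_name, field_type in receiver.inputs.items():
            if field_name not in sender.outputs:
                problems.append(f"{sender_name} -> {receiver_name}: missing field '{field_name}'")
            elif sender.outputs[field_name] != field_type:
                problems.append(
                    f"{sender_name} -> {receiver_name}: '{field_name}' is "
                    f"{sender.outputs[field_name]}, expected {field_type}"
                )
    # Termination check (necessary, not sufficient): every agent must be able
    # to reach a terminal agent by following handoff edges.
    successors = {name: set() for name in agents}
    for sender_name, receiver_name in edges:
        successors[sender_name].add(receiver_name)
    for start in agents:
        seen, stack = set(), [start]
        while stack:
            node = stack.pop()
            if node not in seen:
                seen.add(node)
                stack.extend(successors[node])
        if not any(agents[name].terminal for name in seen):
            problems.append(f"{start}: no path to a terminal agent")
    return problems


agents = {
    "intake": AgentSpec("intake", inputs={}, outputs={"ticket_id": "str", "priority": "int"}),
    "resolver": AgentSpec("resolver", inputs={"ticket_id": "str", "priority": "str"},
                          outputs={}, terminal=True),
}
print(check_handoffs(agents, [("intake", "resolver")]))
# ["intake -> resolver: 'priority' is int, expected str"]
```

A real system would compare richer schemas than flat type names, but the shape of the check is the same: every edge in the handoff graph is verified before deployment rather than discovered at runtime.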

Most engineering teams are not equipped for manual agent construction. They don't need to be. The Meta-Agent closes the expertise gap entirely.

Problem 2: The Integration Wall

Even when an organization solves the agent-building problem, it collides with a second hard reality: the systems agents need to operate on were never designed for them.

The AI industry has converged on the Model Context Protocol (MCP) as the standard way to expose system capabilities to agents. When MCP is implemented well, it works. It gives agents clean, typed, discoverable tool surfaces. It makes integration tractable.

But MCP covers a fraction of what enterprise agents actually need to touch, and even where it applies, it creates problems of its own.

For systems that already expose a well-defined API (REST, GraphQL, gRPC), MCP introduces a parallel API surface that must be built, maintained, and kept in sync with the underlying interface. This is not free. Every MCP server is a new codebase: new authentication handling, new error semantics, new versioning concerns, new deployment artifacts, all duplicating logic that already exists in the native API. For organizations with hundreds of internal services, MCP doesn't simplify integration. It doubles the integration surface area. And when the native API changes, the MCP layer must change too, or agents silently break.

Real enterprise environments compound this further. They are not populated with modern SaaS platforms that ship polished MCP servers alongside their REST APIs. They are populated with SAP instances from 2009, custom COBOL batch jobs, ERP systems with undocumented SOAP endpoints, data warehouses with no API layer at all, and critical workflows that live entirely inside someone's spreadsheet. These systems run the business. They will not be replaced. And there is no MCP connector for them.

Building MCP coverage for a large enterprise's full system landscape is a multi-year project. Every API must be studied, every authentication scheme handled, every edge case documented, every tool definition carefully worded, because MCP tool definitions are not inert metadata. They directly shape agent behavior. A poorly written tool description causes agents to misuse the tool. A missing parameter description causes agents to hallucinate values. An imprecise schema causes agents to call the wrong tool entirely.
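Some of these definition gaps can be caught mechanically. The sketch below lints a tool definition shaped like an MCP tool (a `name`, a `description`, and a JSON Schema `inputSchema`). `lint_tool`, its rules, and the five-word threshold are illustrative assumptions, not part of MCP, and passing them says nothing about whether a description is actually accurate.

```python
def lint_tool(tool: dict, min_description_words: int = 5) -> list:
    """Return warnings for gaps in an MCP-style tool definition that tend to mislead agents.

    Hypothetical helper: the rules and the word threshold are illustrative assumptions.
    """
    warnings = []
    name = tool.get("name", "<unnamed>")
    if len(tool.get("description", "").split()) < min_description_words:
        warnings.append(f"{name}: tool description is missing or too short")
    schema = tool.get("inputSchema", {})
    properties = schema.get("properties", {})
    # A required parameter that is never declared leaves the agent guessing its meaning.
    for param in schema.get("required", []):
        if param not in properties:
            warnings.append(f"{name}: required parameter '{param}' is not declared")
    for param, spec in properties.items():
        if not spec.get("description", "").strip():
            warnings.append(f"{name}: parameter '{param}' has no description")
        if "type" not in spec and "enum" not in spec:
            warnings.append(f"{name}: parameter '{param}' has no type")
    return warnings


tool = {
    "name": "create_invoice",  # hypothetical ERP tool
    "description": "Create an invoice.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "customer_id": {"type": "string", "description": "ERP customer number"},
            "amount": {"type": "number"},
        },
        "required": ["customer_id", "amount", "currency"],
    },
}
for warning in lint_tool(tool):
    print(warning)
```

Here the linter flags the three-word description, the undeclared `currency` parameter, and the undocumented `amount`: exactly the kinds of gaps that lead an agent to guess.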

And coverage, once built, does not stay built. Enterprise systems change continuously. A new version of the ERP adds a function. An API is deprecated. An authentication scheme rotates. Each change must be reflected in the MCP layer, and each change to the MCP layer is a potential regression in agent behavior. This failure mode has no close analogue in traditional statically typed software, where changing a function signature breaks callers at compile time. In agentic systems, changing a tool definition breaks agents at runtime, silently, and the symptoms may not surface for days.
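Because a tool-definition change does not fail at compile time, one mitigation is to diff every new version against the deployed one and treat any behavior-relevant change as a trigger for re-evaluating the agents that use the tool. The sketch below assumes the same MCP-style definition shape; `diff_tools` is a hypothetical helper, and it cannot tell a rename apart from a removal plus an unrelated addition.

```python
def diff_tools(old: dict, new: dict) -> list:
    """List behavior-relevant differences between two versions of a tool definition.

    Hypothetical helper: a non-empty result would gate deployment until the
    agents that call this tool have been re-checked.
    """
    changes = []
    if old.get("description") != new.get("description"):
        changes.append("description changed")
    old_schema = old.get("inputSchema", {})
    new_schema = new.get("inputSchema", {})
    old_props = old_schema.get("properties", {})
    new_props = new_schema.get("properties", {})
    for param in sorted(old_props.keys() - new_props.keys()):
        changes.append(f"parameter removed or renamed: {param}")
    for param in sorted(new_props.keys() - old_props.keys()):
        changes.append(f"parameter added: {param}")
    for param in sorted(old_props.keys() & new_props.keys()):
        if old_props[param] != new_props[param]:
            changes.append(f"parameter changed: {param}")
    newly_required = set(new_schema.get("required", [])) - set(old_schema.get("required", []))
    for param in sorted(newly_required):
        changes.append(f"parameter now required: {param}")
    return changes


v1 = {"name": "get_order", "description": "Fetch an order by id.",
      "inputSchema": {"type": "object", "properties": {"order_id": {"type": "string"}},
                      "required": ["order_id"]}}
v2 = {"name": "get_order", "description": "Fetch an order by id.",
      "inputSchema": {"type": "object", "properties": {"order_ref": {"type": "string"}},
                      "required": ["order_ref"]}}
for change in diff_tools(v1, v2):
    print(change)
```

The renamed parameter shows up as a removal, an addition, and a new required field, which is precisely the kind of change that would otherwise surface only when an agent starts sending the old name.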

The practical result: most enterprises find that connecting agents to their actual systems (not toy demos, not greenfield SaaS) takes months of dedicated integration engineering per system. The agents are ready before the integrations are. The ROI case that looked compelling on paper is deferred indefinitely.

Solution: Self-Coding Agents - Integration on Demand

Zenera breaks the integration wall entirely. Instead of requiring pre-built connectors or MCP servers for every system, Zenera agents are self-coding: they generate integration code on demand. When an agent needs to interact with a system, whether it has a REST API, a SOAP endpoint, a legacy protocol, or a barely documented internal interface, the agent explores the available API surface, reasons about documentation and response patterns, and synthesizes the necessary integration code at runtime. That code is executed in a sandboxed container, validated against expected behavior, and persisted once it works.
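The generate, execute, validate, persist loop described above can be sketched as follows. This is an illustration under explicit assumptions, not Zenera's implementation: the candidate sources are hard-coded strings standing in for model-generated code, a plain subprocess stands in for the sandboxed container (it isolates the process, not the filesystem or network), and `run_candidate`, `synthesize_integration`, and the in-memory `registry` are hypothetical names. Each candidate reads a JSON payload on stdin and prints a JSON result.

```python
import json
import subprocess
import sys
from typing import Callable, Iterable, Optional


def run_candidate(source: str, payload: dict, timeout_s: float = 5.0) -> Optional[dict]:
    """Run candidate code in a separate Python process; return its JSON output or None."""
    try:
        # Stand-in for a container: process isolation only, no filesystem/network limits.
        proc = subprocess.run(
            [sys.executable, "-c", source],
            input=json.dumps(payload),
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        return None
    if proc.returncode != 0:
        return None
    try:
        return json.loads(proc.stdout)
    except json.JSONDecodeError:
        return None


def synthesize_integration(candidates: Iterable[str], payload: dict,
                           is_valid: Callable[[dict], bool], registry: dict, key: str) -> bool:
    """Try generated candidates in order; persist the first one whose output validates."""
    for source in candidates:
        result = run_candidate(source, payload)
        if result is not None and is_valid(result):
            registry[key] = source  # persisted only after it has been shown to work
            return True
    return False


# Hypothetical "generated" candidates: the first crashes, the second is correct.
broken = "import json\nprint(json.dumps({'total': undefined_name}))"
working = (
    "import json, sys\n"
    "data = json.load(sys.stdin)\n"
    "print(json.dumps({'total': sum(data['amounts'])}))"
)
registry = {}
ok = synthesize_integration([broken, working], payload={"amounts": [10, 20, 12]},
                            is_valid=lambda r: r.get("total") == 42,
                            registry=registry, key="erp.invoice_total")
print(ok, registry.get("erp.invoice_total") == working)  # True True
```

Persisting only after validation means a failing candidate is never stored. A fuller version would likely validate against recorded responses from the target system rather than a single hand-written predicate.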

Integration is no longer a burden. It is an autonomous capability.

This means agents reach systems that no MCP registry will ever cover, and they do it without months of upfront engineering. When a tool definition or API changes, the Meta-Agent evaluates the impact across the full agent graph and adjusts dependent agents before the change reaches production. The integration bottleneck that stalls most enterprise agent projects simply does not exist in Zenera.

III. The Complete Platform

The Meta-Agent and self-coding integrations do not operate in isolation. They run on a purpose-built enterprise runtime that provides what production agents actually need:

- Transactional storage - Agents operate on LakeFS-backed object storage with git-like branch/merge semantics. Changes are atomic. Every mutation is versioned. Concurrent agents cannot corrupt shared datasets.

- Durable workflows - Powered by Temporal, agent workflows survive pod restarts, node migrations, and network partitions. State is persisted at every decision point. Multi-step processes that span hours or days do not lose progress.

- Event-reactive activation - Agents wake on storage events, workflow signals, external webhooks, or human interactions. Every trigger is transactionally paired with its handler: no event is ever lost.

- Enterprise governance - RBAC, audit logs, approval workflows, and compliance policies are native to the platform. Every agent decision is traced end-to-end with full observability.
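A common way to get the "no event is ever lost" property from the event-reactive activation item above is the transactional outbox pattern: the state change and its event record are written in one database transaction, and a separate dispatcher delivers pending events to handlers. The sketch below uses Python's built-in `sqlite3`; the table names and the helpers `create_tables`, `write_document`, and `dispatch` are hypothetical, and the code illustrates the general pattern, not how Zenera implements it.

```python
import sqlite3
from typing import Callable


def create_tables(conn: sqlite3.Connection) -> None:
    conn.executescript(
        """
        CREATE TABLE documents (id TEXT PRIMARY KEY, body TEXT);
        CREATE TABLE outbox (
            seq INTEGER PRIMARY KEY AUTOINCREMENT,
            event TEXT NOT NULL,
            doc_id TEXT NOT NULL,
            delivered INTEGER NOT NULL DEFAULT 0
        );
        """
    )


def write_document(conn: sqlite3.Connection, doc_id: str, body: str) -> None:
    """Store the document and its event in one transaction: both land or neither does."""
    with conn:  # commits on success, rolls back if either statement fails
        conn.execute("INSERT OR REPLACE INTO documents VALUES (?, ?)", (doc_id, body))
        conn.execute("INSERT INTO outbox (event, doc_id) VALUES (?, ?)",
                     ("document.written", doc_id))


def dispatch(conn: sqlite3.Connection, handler: Callable[[str, str], None]) -> int:
    """Deliver pending events in order; mark each delivered only after its handler succeeds."""
    rows = conn.execute(
        "SELECT seq, event, doc_id FROM outbox WHERE delivered = 0 ORDER BY seq"
    ).fetchall()
    for seq, event, doc_id in rows:
        handler(event, doc_id)  # if this raises, the event stays pending for the next run
        with conn:
            conn.execute("UPDATE outbox SET delivered = 1 WHERE seq = ?", (seq,))
    return len(rows)


conn = sqlite3.connect(":memory:")
create_tables(conn)
write_document(conn, "contract-7", "draft")
seen = []
print(dispatch(conn, lambda event, doc_id: seen.append((event, doc_id))))  # 1
print(seen)  # [('document.written', 'contract-7')]
print(dispatch(conn, lambda event, doc_id: seen.append((event, doc_id))))  # 0
```

Delivery here is at-least-once: if the process dies after a handler succeeds but before the event is marked delivered, the event is delivered again, so handlers should be idempotent.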

The Meta-Agent operates with full knowledge of this runtime. Generated agents are purpose-built for the platform they run on, not designed in the abstract and thrown into an unfamiliar environment. After deployment, the Meta-Agent does not disappear. Every modification to a running system (a new tool, a changed workflow, an updated integration) is evaluated for consistency against the full system architecture before it reaches production. It learns from runtime execution feedback: trajectories that succeed, edge cases that surface unexpected behavior, performance patterns that suggest prompt adjustments.

The result is agentic systems that are genuinely native to the enterprise: connected to its real systems, governed by its actual policies, and sustained through its continuous changes.

IV. The 2026 Imperative

What Has Changed

The enterprise AI landscape in 2026 is fundamentally different from a year ago. Three shifts have converged:

- Agents are proven, not theoretical. Coding agents demonstrated transformational productivity gains in software. The same agentic architecture is now delivering results across finance, healthcare, legal, manufacturing, and operations. The debate is over. Agents work.

- The gap is widening, not closing. Organizations that deployed production-grade agentic systems early now have fundamentally different cost structures, cycle times, and capabilities. Their agents learn from production traffic, generate fine-tuning data from real workflows, and improve measurably over time without additional engineering investment. Every month of delay compounds the disadvantage.

- The window is finite. Agentic transformation is not a gradual adoption curve. It is a capability threshold. Once competitors operate with agents that eliminate entire categories of manual work, the organizations still relying on RAG-enhanced chatbots are not slightly behind; they are structurally uncompetitive.

The Opportunity

Any enterprise can now deploy agents that replace entire workflows, not just assist with tasks. The technology is ready. The ROI is proven. The use cases span every department: compliance autopilots, autonomous operations centers, internal research systems, application generators, hiring pipelines, supply chain orchestration. The question is not whether to build agents, but how fast you can get them into production.

The Challenge

Two barriers block most organizations. First, building production-grade multi-agent systems requires a discipline that does not exist at scale, and one that humans are fundamentally ill-suited to master manually. Second, connecting agents to the real enterprise system landscape (legacy APIs, undocumented endpoints, decades of accumulated infrastructure) takes months per system using conventional approaches.

These barriers are not declining. As agentic systems grow more sophisticated and enterprises demand deeper integration, the engineering complexity and integration burden only increase.

The Zenera Answer

Zenera eliminates both barriers.

The Meta-Agent turns agent construction from a months-long engineering discipline into a conversation. Describe the problem. Get a production-grade multi-agent system: architected, validated, governed, and deployed. In minutes, anyone with a modest technical background can build systems comparable to Claude Code, or purpose-built vertical agents for legal, finance, healthcare, and operations.

Self-coding integration removes the dependency on pre-built connectors. Agents reach any system (REST, SOAP, legacy, undocumented) by synthesizing their own integration code at runtime. The multi-year integration roadmap collapses to hours.

The enterprise-grade runtime provides what production agents actually need: transactional storage, durable workflows, event-reactive activation, and native governance.

The organizations that will define competitive advantage in the next decade are not those that deployed better chatbots. They are those that built better agents, and did so before the window closed.

Zenera exists to make that possible. Reliably. At enterprise scale. Starting now.