Zenera Agentic Platform

Enterprise-Grade AI Agent Infrastructure for Mission-Critical Operations

Zenera is a production-ready agentic AI platform engineered for enterprises that demand reliability, scalability, and full operational control. Unlike fragmented AI toolkits, Zenera provides a unified infrastructure layer where autonomous agents operate with transactional guarantees, survive infrastructure failures, and integrate seamlessly with complex enterprise ecosystems.

"The platform eliminates the undifferentiated heavy lifting of agent infrastructure, allowing teams to focus on the business logic that creates competitive advantage."

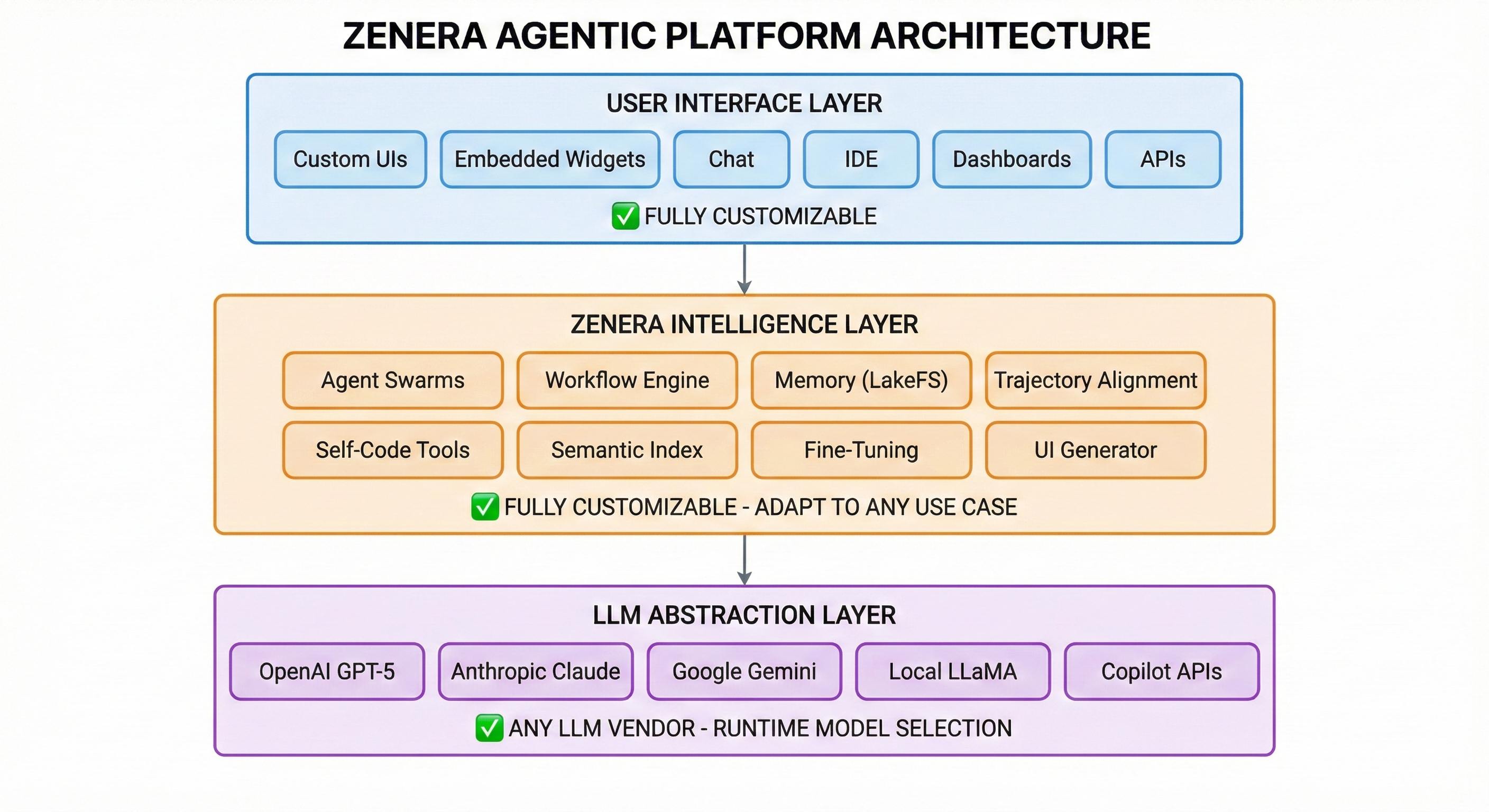

Zenera Agentic Platform Architecture

Core Technical Capabilities

Twelve enterprise-grade capabilities engineered for production AI agent deployments.

Transactional Object Memory

Problem Solved

AI agents operating on enterprise data require ACID-like guarantees. Traditional agent frameworks treat storage as an afterthought, leading to data corruption, race conditions, and unrecoverable states.

Zenera Architecture

Git-like versioning at scale -- Built on lakeFS over MinIO, providing branch/merge semantics for multi-gigabyte datasets

Atomic operations -- Agents read and write to isolated branches; changes are committed atomically or rolled back entirely

Full lineage tracking -- Every data mutation is versioned with complete provenance, enabling audit trails and rollback to any point in time

Concurrent agent safety -- Multiple agents can operate on shared datasets with optimistic concurrency control and conflict resolution

Agents can safely transform terabytes of structured and unstructured data with the same confidence developers have in database transactions.
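
The branch, commit, and conflict semantics above can be illustrated with a small in-memory model. This is a minimal sketch, not the Zenera or lakeFS API: `ToyVersionedStore`, `ConflictError`, and the whole-store version check are hypothetical names and simplifications chosen for illustration.

```python
import copy


class ConflictError(Exception):
    """Raised when a branch is merged after main has moved past its fork point."""


class ToyVersionedStore:
    """In-memory stand-in for a versioned object store (hypothetical, not lakeFS)."""

    def __init__(self):
        self.main = {}       # committed objects: key -> value
        self.version = 0     # bumped on every successful merge or rollback
        self.history = []    # (version, message, snapshot) kept for lineage

    def branch(self):
        # A branch is a private copy plus the version it was forked from.
        return {"base": self.version, "data": copy.deepcopy(self.main)}

    def merge(self, branch, message):
        # Optimistic concurrency: refuse the merge if main changed since the fork.
        if branch["base"] != self.version:
            raise ConflictError("main changed since branch was created")
        self.main = copy.deepcopy(branch["data"])
        self.version += 1
        self.history.append((self.version, message, copy.deepcopy(self.main)))
        return self.version

    def rollback(self, version):
        # Restore main to an earlier snapshot and record the rollback itself.
        for v, _message, snapshot in self.history:
            if v == version:
                self.main = copy.deepcopy(snapshot)
                self.version += 1
                self.history.append(
                    (self.version, f"rollback to {version}", copy.deepcopy(self.main))
                )
                return self.version
        raise KeyError(version)


store = ToyVersionedStore()
a = store.branch()
b = store.branch()
a["data"]["invoice-1"] = "approved"
b["data"]["invoice-1"] = "rejected"
store.merge(a, "agent A approves invoice")       # first writer wins
try:
    store.merge(b, "agent B rejects invoice")
    conflict = False
except ConflictError:
    conflict = True                              # agent B must re-branch and retry
```

Detecting conflicts at whole-store granularity is deliberately coarse; a production system would typically compare changes per object or per path before rejecting a merge.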

Fault-Tolerant Workflow Orchestration

Problem Solved

Complex agent workflows span hours or days, involve external API calls, and must survive node failures, network partitions, and Kubernetes pod migrations without losing state.

Zenera Architecture

Durable execution engine -- Powered by Temporal, workflows persist their execution state to durable storage at every decision point

Automatic retries with exponential backoff -- Failed activities are retried with configurable policies; human escalation paths are first-class citizens

Cross-node migration -- Workflows seamlessly resume on different nodes after failures; no checkpointing logic is required in agent code

Long-running process support -- Workflows can pause for days awaiting human approval, external events, or scheduled triggers

Heterogeneous environment resilience -- Designed for real enterprise Kubernetes deployments where nodes come and go unpredictably

Multi-step agent workflows that would otherwise require months of custom infrastructure engineering run out of the box.
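
The retry behavior described above can be sketched in plain Python. This is an illustration of exponential backoff only, under assumed defaults; it does not use the Temporal SDK and does not persist state, which is the part a durable execution engine adds. The names `backoff_delays` and `run_with_retries` are hypothetical.

```python
import time


def backoff_delays(initial=1.0, factor=2.0, maximum=30.0, attempts=5):
    """Return the wait before each retry: initial, initial*factor, ..., capped at maximum."""
    return [min(initial * factor ** i, maximum) for i in range(attempts)]


def run_with_retries(activity, delays, sleep=time.sleep):
    """Call activity(); after each failure wait the next delay and try again.

    Returns (result, number_of_retries). Re-raises the last error once
    all delays are used up.
    """
    last_error = None
    for attempt, delay in enumerate([0.0] + list(delays)):
        if delay:
            sleep(delay)
        try:
            return activity(), attempt
        except Exception as exc:
            last_error = exc
    raise last_error


calls = {"n": 0}


def flaky():
    # Hypothetical activity that fails twice, then succeeds.
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("transient failure")
    return "ok"


waited = []  # record the delays instead of actually sleeping
result, retries = run_with_retries(flaky, backoff_delays(attempts=4), sleep=waited.append)
```

Passing `sleep` as a parameter keeps the example fast to run and makes the schedule easy to inspect; a human escalation step could be attached where the final error is re-raised.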

Self-Coding Agents

Problem Solved

Enterprise systems expose thousands of APIs across decades of technology generations. Pre-built integrations cover only a fraction of them; MCP-style tool registries require extensive upfront engineering.

Zenera Architecture

Runtime code synthesis -- Agents dynamically generate, validate, and execute integration code when encountering unfamiliar APIs or data formats

Sandboxed execution -- Generated code runs in isolated containers with strict resource limits and network policies

Learning loop -- Successfully synthesized tools are persisted, reviewed, and promoted to the standard toolchain

Legacy system bridging -- Agents can interface with SOAP services, mainframe terminals, proprietary protocols, and undocumented APIs by reasoning about response patterns

MCP compatibility -- Full support for Model Context Protocol when standardized tools are available and preferred

One agent integrated with a 15-year-old ERP system in hours -- a task that previously required months of dedicated integration development.
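
To make the sandboxed execution step concrete, the sketch below runs generated code in a separate interpreter process with a wall-clock limit. It is a simplified stand-in: the platform is described as using isolated containers with resource limits and network policies, none of which a plain subprocess provides. The function name `run_generated_code` and the result fields are assumptions for illustration.

```python
import subprocess
import sys


def run_generated_code(source, timeout_s=5.0):
    """Run untrusted Python source in a child interpreter with a time limit."""
    try:
        proc = subprocess.run(
            # -I starts Python in isolated mode (ignores env vars and user site-packages).
            [sys.executable, "-I", "-c", source],
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        # The child is killed before this exception is raised.
        return {"ok": False, "reason": "timeout", "stdout": ""}
    if proc.returncode != 0:
        return {"ok": False, "reason": "error", "stdout": proc.stdout}
    return {"ok": True, "reason": "", "stdout": proc.stdout}


good = run_generated_code("print(sum(range(10)))")
bad = run_generated_code("while True: pass", timeout_s=1.0)
```

Only code that returns `ok` would be a candidate for the review and promotion step in the learning loop; a timeout or non-zero exit is treated as a failed synthesis attempt.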

AI-Powered Agent Orchestration & Alignment

Problem Solved

As agent swarms grow, emergent conflicts arise: contradictory system prompts, infinite handoff loops, and trajectories that never terminate. Manual alignment doesn't scale.

Zenera Architecture

Trajectory prediction engine -- Before execution, AI analyzes the full graph of possible agent handoffs and tool invocations to identify loops, dead ends, and conflicts

Prompt consistency verification -- System prompts across all agents in a workflow are analyzed for semantic contradictions and ambiguities

Runtime alignment correction -- During execution, the orchestrator can inject clarifying context to prevent detected misalignments

Guaranteed termination -- Trajectories are verified to have well-defined exit conditions; non-terminating patterns are flagged before deployment

Swarm coordination -- Agents operating in parallel are given mutually consistent views of shared state and objectives

The orchestration layer treats agent coordination as a first-class AI problem, not an afterthought requiring manual prompt engineering.
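
One simple form of pre-execution trajectory analysis is a graph walk over the declared agent handoffs. The sketch below uses a depth-first search to flag handoff loops and dead ends; it is a static check under assumed inputs, not the platform's prediction engine, and the agent names and `find_handoff_issues` function are hypothetical.

```python
def find_handoff_issues(graph, start, terminals):
    """Walk the handoff graph from start and report loops and dead ends.

    graph: agent name -> list of agents it can hand off to.
    terminals: agents that are allowed to end a trajectory.
    Returns (cycles, dead_ends).
    """
    cycles, dead_ends = [], []
    state = {}  # node -> "active" while on the DFS stack, "done" afterwards

    def visit(node, path):
        state[node] = "active"
        successors = graph.get(node, [])
        if not successors and node not in terminals:
            dead_ends.append(node)
        for nxt in successors:
            if state.get(nxt) == "active":
                # Back edge: nxt is already on the current path, so this is a loop.
                cycles.append(path[path.index(nxt):] + [nxt])
            elif nxt not in state:
                visit(nxt, path + [nxt])
        state[node] = "done"

    visit(start, [start])
    return cycles, dead_ends


handoffs = {
    "intake": ["billing", "support"],
    "billing": ["review"],
    "review": ["billing", "done"],   # review can bounce back to billing
    "support": ["escalation"],       # escalation has no exit and is not terminal
}
cycles, dead_ends = find_handoff_issues(handoffs, "intake", terminals={"done"})
```

A loop in the handoff graph does not prove that a trajectory never terminates, since the agents may exit it at run time; it marks a pattern that deserves an explicit exit condition before deployment.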

Integrated Model Fine-Tuning Pipeline

Problem Solved

Enterprises cannot depend on generic foundation models for domain-specific tasks. Manual fine-tuning requires ML expertise and disconnected toolchains.

Zenera Architecture

Model abstraction layer -- Agents are decoupled from specific models; the runtime selects optimal models based on task characteristics, latency requirements, and cost constraints

Automatic dataset collection -- Every agent interaction is traced; high-quality examples are automatically curated for fine-tuning datasets

SFT and preference tuning -- Integrated supervised fine-tuning and DPO/RLHF pipelines optimize models on collected trajectories

Performance regression detection -- Fine-tuned models are evaluated against held-out test sets before promotion

Seamless model hot-swap -- Updated models are deployed without agent code changes or workflow restarts

The platform continuously learns from production traffic, automatically improving model performance without dedicated ML operations.
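
The model abstraction layer can be pictured as a selection function over model profiles. The following is a minimal sketch assuming three hypothetical models with made-up quality, latency, and cost figures; the `ModelProfile` fields and `select_model` rule are illustrative, not the runtime's actual policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    quality: float             # offline evaluation score in [0, 1], higher is better
    p95_latency_ms: int
    cost_per_1k_tokens: float


def select_model(models, max_latency_ms, max_cost_per_1k):
    """Pick the highest-quality model that fits the latency and cost budget."""
    eligible = [
        m for m in models
        if m.p95_latency_ms <= max_latency_ms and m.cost_per_1k_tokens <= max_cost_per_1k
    ]
    if not eligible:
        raise LookupError("no model satisfies the constraints")
    return max(eligible, key=lambda m: m.quality)


# Hypothetical catalog; all numbers are invented for the example.
catalog = [
    ModelProfile("large-general", 0.92, 2400, 0.0300),
    ModelProfile("small-general", 0.78, 300, 0.0010),
    ModelProfile("small-finetuned", 0.88, 350, 0.0015),
]
interactive = select_model(catalog, max_latency_ms=500, max_cost_per_1k=0.01)
batch = select_model(catalog, max_latency_ms=5000, max_cost_per_1k=0.05)
```

Because agents state constraints rather than model names, adding a newly fine-tuned entry to the catalog changes which model is selected without touching agent code.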

Semantic Memory Index (Integrated RAG)

Problem Solved

Agents need access to gigabytes of enterprise knowledge -- documents, images, diagrams, tables -- with sub-second retrieval and multimodal reasoning.

Zenera Architecture

Hybrid vector + lexical search -- OpenSearch-based SemanticDB combines dense embeddings with BM25 for optimal recall across query types

Multimodal indexing -- Text, images, diagrams, and scanned documents are indexed with modality-specific encoders

Hierarchical chunking -- Documents are decomposed into semantically coherent chunks with preserved structural relationships

Broad format support -- PDF, DOCX, XLSX, images, CAD files, and proprietary formats are ingested through an extensible parser pipeline

Real-time sync -- Knowledge bases stay current with incremental indexing from source systems

Agents reason over the complete enterprise knowledge base, not just recent context windows.
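
Hybrid search needs a rule for combining the lexical and vector result lists. The source does not say how SemanticDB fuses them; the sketch below assumes reciprocal rank fusion, a common choice that merges rankings without having to normalize BM25 and embedding scores against each other. The document IDs are hypothetical.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs (best first) into one ranking.

    Each document scores 1 / (k + rank) in every list where it appears;
    the scores are summed across lists.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest score first; ties broken by document ID for deterministic output.
    return sorted(scores, key=lambda d: (-scores[d], d))


bm25_hits = ["doc-7", "doc-2", "doc-9"]     # lexical ranking
vector_hits = ["doc-2", "doc-4", "doc-7"]   # dense-embedding ranking
fused = reciprocal_rank_fusion([bm25_hits, vector_hits])
```

Documents that appear in both lists, such as `doc-2` and `doc-7` here, rise above documents found by only one retriever, which is the behavior that improves recall across query types.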

Integrated Development Environment

Problem Solved

Building, testing, and monitoring agent systems requires jumping between disconnected tools -- chat interfaces, code editors, observability dashboards.

Zenera Architecture

Unified agent IDE -- Edit prompts, tool definitions, and workflow logic in a purpose-built environment

AI-assisted authoring -- Generate agent definitions, system prompts, and tool schemas from natural language specifications

Trajectory visualization -- Graphically explore possible execution paths; identify alignment issues before deployment

Live debugging -- Step through agent execution, inspect intermediate states, and modify behavior in real time

Version control integration -- All agent artifacts are versioned with Git-compatible semantics

The development experience is engineered for agent builders, not retrofitted from chatbot frameworks.

Enterprise Observability Stack

Problem Solved

Production AI systems require the same operational visibility as traditional infrastructure -- but with agent-specific telemetry.

Zenera Architecture

Full Grafana integration -- Pre-built dashboards for agent performance, model latency, error rates, and resource utilization

Distributed tracing -- Every agent decision, tool call, and model invocation is traced with OpenTelemetry compatibility

Log aggregation -- Centralized logging with Loki; structured logs include agent context, session IDs, and correlation tokens

Alerting pipelines -- Configurable alerts for SLA violations, error spikes, and anomalous agent behavior

Cost attribution -- Token usage and compute costs are tracked per agent, per workflow, and per tenant

Operations teams manage agent infrastructure with the same tools and practices they use for traditional services.
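
Cost attribution reduces to aggregating usage events along the dimensions named above. This is a minimal sketch assuming a hypothetical event shape (`tenant`, `workflow`, `agent`, `model`, `tokens`) and an invented price table; real telemetry would carry more fields and separate input from output tokens.

```python
from collections import defaultdict


def attribute_costs(usage_events, price_per_1k_tokens):
    """Sum token cost per (tenant, workflow, agent) from raw usage events."""
    totals = defaultdict(float)
    for event in usage_events:
        cost = event["tokens"] / 1000 * price_per_1k_tokens[event["model"]]
        totals[(event["tenant"], event["workflow"], event["agent"])] += cost
    return dict(totals)


# Hypothetical prices and events for illustration only.
prices = {"small-general": 0.001, "large-general": 0.03}
events = [
    {"tenant": "acme", "workflow": "claims", "agent": "triage", "model": "small-general", "tokens": 4000},
    {"tenant": "acme", "workflow": "claims", "agent": "triage", "model": "large-general", "tokens": 1000},
    {"tenant": "acme", "workflow": "claims", "agent": "review", "model": "large-general", "tokens": 2000},
]
costs = attribute_costs(events, prices)
```

Rolling the same totals up by workflow or by tenant is a matter of grouping on a shorter key.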

Cloud-Native Deployment Model

Problem Solved

Enterprises need deployment flexibility -- from developer laptops to air-gapped private clouds -- without re-architecting the platform.

Zenera Architecture

Kubernetes-native -- Helm charts and operators for production-grade deployment on any conformant cluster

Desktop mode -- Full platform functionality in a single Docker Compose stack for development and edge deployment

Multi-tenancy -- Namespace isolation, resource quotas, and RBAC for shared cluster deployments

Hybrid cloud support -- Agents can execute across cloud boundaries with secure cross-cluster communication

Offline operation -- Core functionality operates without internet connectivity; local models are supported

Deploy anywhere -- from a MacBook to a private Kubernetes cluster in a regulated data center.

Traceability & Explainability

Problem Solved

Regulated industries require AI systems to justify every decision. Black-box agents are non-starters for compliance.

Zenera Architecture

Decision logging -- Every agent decision captures the reasoning chain, retrieved context, and selected action with confidence scores

Audit trails -- Immutable logs support SOC 2, HIPAA, and financial services audit requirements

Counterfactual analysis -- Replay decisions with modified context to understand sensitivity to inputs

Human-readable explanations -- Agents generate plain-language justifications suitable for non-technical stakeholders

Regulatory report generation -- Automated extraction of decision logs into compliance-ready formats

Agents operating in Zenera are not black boxes -- every decision is traceable to source context and reasoning.
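
One way to make a decision log tamper-evident is to chain records by hash, so that each entry commits to the one before it. The source does not describe how immutability is achieved; hash chaining is an assumption here, and the record fields and function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first record's predecessor


def append_decision(log, decision):
    """Append a decision record whose hash covers the previous record's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = json.dumps(decision, sort_keys=True)
    digest = hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()
    log.append({"decision": decision, "prev_hash": prev_hash, "hash": digest})


def verify_chain(log):
    """Return True if every record still matches its hash and links to its predecessor."""
    prev_hash = GENESIS
    for entry in log:
        body = json.dumps(entry["decision"], sort_keys=True)
        expected = hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_decision(audit_log, {
    "agent": "claims-triage", "action": "approve", "confidence": 0.93,
    "context_ids": ["policy-12", "claim-881"], "reasoning": "within coverage limits",
})
append_decision(audit_log, {
    "agent": "claims-review", "action": "escalate", "confidence": 0.61,
    "context_ids": ["claim-881"], "reasoning": "amount exceeds auto-approval threshold",
})
intact = verify_chain(audit_log)

audit_log[0]["decision"]["action"] = "deny"      # simulate tampering with history
tampered_detected = not verify_chain(audit_log)
```

A chain like this detects edits and reordering but not truncation of the most recent records, so the latest hash would also need to be anchored somewhere the writer cannot modify.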

Automatic UI Generation

Problem Solved

Most agent platforms are chat-first, forcing users into conversational interfaces for tasks better served by structured UIs.

Zenera Architecture

Dynamic interface synthesis -- Agents generate interactive forms, tables, charts, and dashboards tailored to the task at hand

Component library -- Rich widget set including data grids, visualization components, input forms, and approval workflows

Real-time binding -- Generated UIs are live-bound to agent state; updates propagate instantly

Responsive design -- Interfaces adapt to desktop, tablet, and mobile form factors

Embedding support -- Generated UIs can be embedded in existing enterprise portals and applications

Zenera agents are not chatbots -- they deliver purpose-built interfaces that match the complexity of enterprise workflows.

In-App Vibe Coding

Problem Solved

Business users want to go beyond one-off tasks -- they want to create reusable applications without waiting for IT development cycles.

Zenera Architecture

Natural language application definition -- Users describe desired functionality; the platform synthesizes complete applications

Enterprise integration -- Generated applications connect to live data sources, respecting existing access controls

Persistence and sharing -- Applications are saved, versioned, and shareable across the organization

Live data binding -- Applications always display current data; no stale exports or manual refreshes

Governance integration -- Generated applications are subject to the same approval and audit workflows as IT-delivered software

Users don't just run agents -- they create production applications that integrate seamlessly into enterprise systems and remain current automatically.

Summary

Zenera is infrastructure for enterprises that treat AI agents as production systems, not experiments. Every capability -- from transactional storage to automatic fine-tuning -- is engineered for the operational realities of heterogeneous enterprise environments.

The platform eliminates the undifferentiated heavy lifting of agent infrastructure, allowing teams to focus on the business logic that creates competitive advantage.
