The Death (and Rebirth) of Enterprise AI

AI is no longer an experiment. The rules have changed.

For the last few years, enterprise AI has lived in a safe place. Labs. Demos. Pilots. POCs. It was fine if things broke. It was fine if the results were inaccurate. It was fine if no one could explain how the system worked. Budgets were exploratory. Expectations were low. Failure was justified as 'learning'.

That era is over. In 2026, boards have started asking for results and ROI. They're demanding accountability. Customers expect reliability. AI is no longer treated like a research project. It is now expected to behave like infrastructure. And this is where most enterprise AI initiatives are collapsing.

In 2026, 'It Works in the Demo' Is No Longer Acceptable

Most AI initiatives still start the same way: capability over outcomes. That approach worked five years ago. Today, the same 'highly capable' AI demos impress leadership for exactly one meeting. Then they fall apart. This is why so many enterprise AI projects die after the pilot phase.

The Bar for Judging AI Just Got Higher

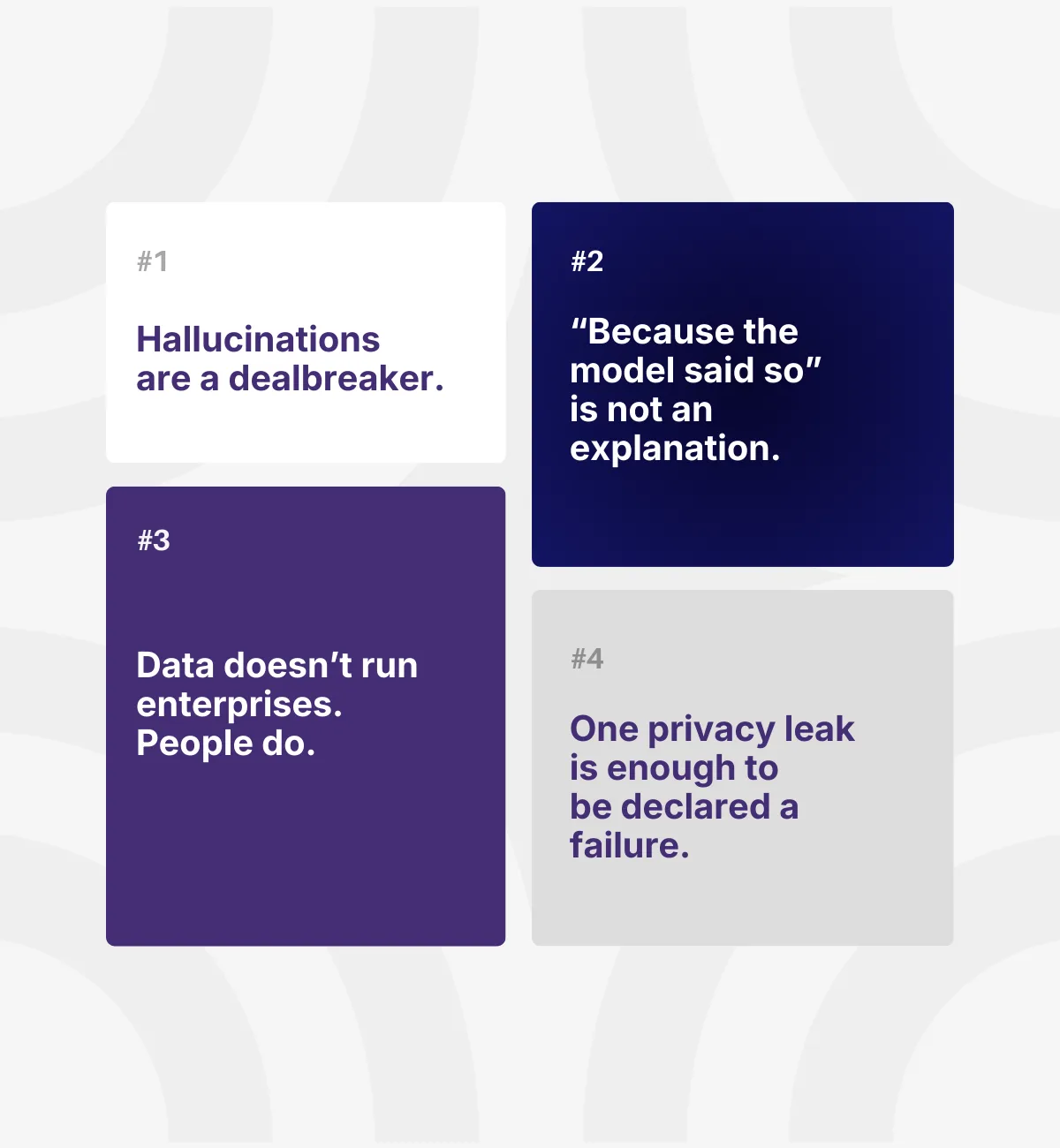

Enterprise AI is now being judged by the same standards as databases, billing systems, and security infrastructure. That means the results it generates matter far more than what it is capable of. Everyone is looking for positive ROI. Today, four things matter. Just four. Miss even one, and I guarantee your initiative will collapse.

Hallucinations Are a Dealbreaker

In content generation, inaccuracies are annoying. In enterprise decision-making, they are unacceptable.

There's absolutely no room to:

- tolerate hallucinations

- rely on probabilistic guesses

- review outputs manually forever

If AI is involved in operations, decisions, or workflows, it must behave deterministically within constraints.

"Because the Model Said So" Is Not an Explanation

Enterprises need to know:

- how a decision was made

- what data was used

- which logic paths were followed

- why one action was chosen over another

This matters most when AI recommendations affect people, money, risk, and compliance. If an AI recommends firing a key employee, it had better explain why. Explainability has to exist at the execution and reasoning level, not as a post-hoc story. If you can't explain it, you can't operate it.

Data Doesn't Run Enterprises. People Do.

This is the most overlooked dimension. Most enterprises think that they lack data. In reality, they lack institutionalized (human) knowledge.

Every organization runs on tribal logic:

- who to involve

- what to validate

- when to escalate

- which shortcuts are acceptable

- which mistakes are fatal

Most AI systems aren't ready to tackle this. They learn from data, but they don't learn from experts. That is a serious problem, especially for large-scale systems. Great enterprise AI lets domain experts instruct the system, correct it, guide its future behavior, and encode judgment, not just rules. If AI cannot learn from the people who actually run the business, it will always remain replicable.

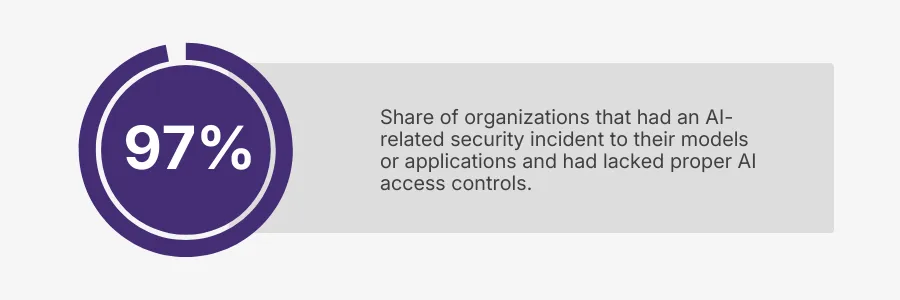

One Privacy Leak Is Enough to Declare Failure

Privacy and security are table stakes for enterprise AI deployments. Enterprise data is not sandbox data. Some of it is regulated. Some is extremely confidential. Some cannot leave the system. Some cannot even be exposed internally. Yet many AI stacks go cloud-first by default. That works until it doesn't. One leak, one accidental exposure, one compliance failure, and the entire initiative gets shut down. If AI cannot meet enterprise-grade privacy and security requirements by design, it will never make it past governance.

How to Make Enterprise AI That Doesn't Fall Apart

What's happening now is more than your average 'AI upgrade cycle.' It's a shift that will define the next decade of enterprise AI, much like the shift from on-prem software to cloud infrastructure, or from ad-hoc scripts to reliable platforms. As Darwin's theory of evolution suggests, the organisms that adapt best to change are the ones that survive. We've witnessed this in the tech world too. Teams that re-architected early won. Teams that treated the shift as a feature lost.

The same pattern is playing out again, and I'd bet on this: AI stacks built for experimentation will not survive the ROI phase. They will either be paused indefinitely or quietly decommissioned after they fail. The winners will be the enterprises that treat AI as infrastructure from day one. That's where real ROI comes from.

The Internal Audit

Before you move on, pause for a moment. Screenshot the question below and share it in your team Slack. The discussion it triggers will be far more revealing than any AI roadmap out there.

This shift is exactly why we built Zenera the way we did. I understand that thinking about all four dimensions at once can be overwhelming. If you're a founder, CTO, or VP of Product who has tried to deploy AI into real software and felt the friction I just described, let's talk.

Signing off,

Ramu Sunkara

Co-founder, CEO at Zenera AI